After years of working in software development, I’ve noticed two patterns that seem to pop up everywhere and consistently mess things up. I don’t think these are official methodologies but I have seen these names called out: Deadline-Driven Development and Resume-Driven Development.

Both of these approaches might look reasonable on paper, but in practice, they usually lead to burned-out developers, buggy software, and projects that take way longer to maintain than they should have taken to build.

Let me tell you about these patterns, why I think they’re problematic, and how they compare to some better approaches like Test-Driven Development and this new and emerging pattern called Specification-Driven Development.

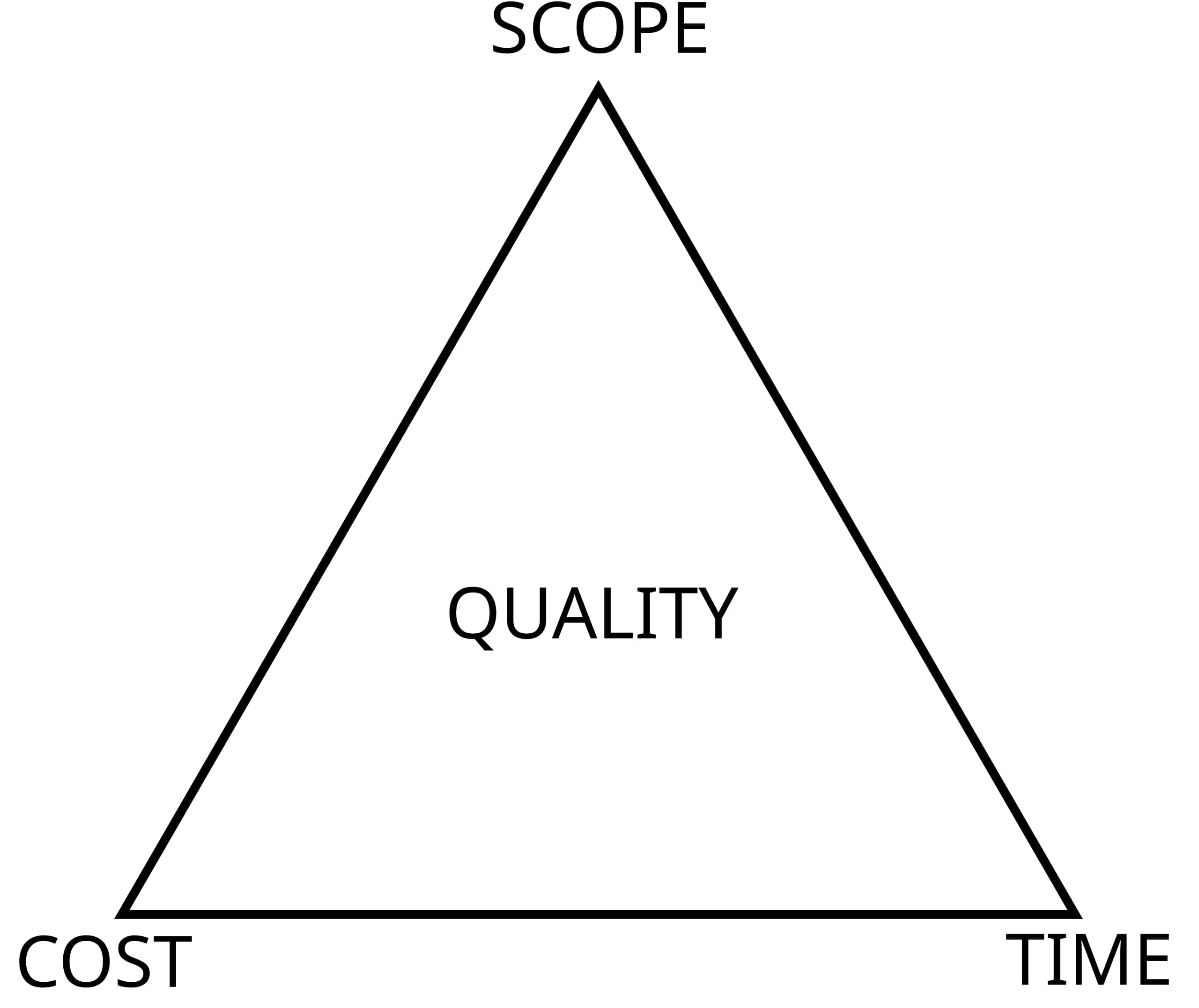

The Iron Triangle of Project Management

Before we get into the specific patterns, let me share a fact: every software project lives in this weird triangle where you have time, quality, and cost. The thing is, you can only really nail two of these at once—the third one is going to suffer.

Source: Wikipedia

Source: Wikipedia

Understanding this constraint is crucial because different development approaches prioritize different dimensions, often with unintended consequences.

Deadline-Driven Development: The Pressure Cooker

In Deadline-Driven Development basically someone in leadership decides “we need to ship by [insert date here]” and then everything gets planned backwards from that deadline.

There could be a genuine reason behind the date, or it could be that it is truly arbitrary to match something else in flight. Regardless of what it is, the conversations are typically top-down in such a way that it becomes a non-negotiable to begin with.

Now since the date for release has already been decided, that doesn’t mean all the time leading to that date would purely be for feature development. There’s a plethora of things that needs to be done beyond the actual development. So basically, the time spent in development may be 50% or less of the total time until the deadline.

The Story of a Typical DDD Project

Picture this: You’re sitting in a meeting room, and your product manager walks in with that look. You know the one—the “I have news” look that’s never actually good news.

“We need to launch by March 15th,” they say. “The board meeting is coming up, and we need to show progress.”

Now, here’s the thing. This project was originally scoped for six months with eight developers. But now it’s January, and you have four developers. The math doesn’t work, but somehow everyone pretends it does.

So what happens? The roadmap gets created backwards from March 15th. Features get squeezed into whatever time is left. Testing becomes “we’ll do it in production.” Code reviews become “just ship it, we’ll fix it later.”

I am obviously exaggerating here, but you get the idea of what I’m trying to convey.

The DDD Cycle of Destruction

This creates this awful cycle that I’ve seen play out way too many times:

- Arbitrary Deadline Setting: Someone announces a date based on business needs, investor pressure, or just wanting to look good

- Backwards Roadmap Creation: Everything gets crammed into whatever timeline is left

- Resource Underestimation: Teams get stretched way too thin

- Quality Compromise: Testing, reviews, and good architecture decisions get skipped

- Technical Debt Accumulation: All those shortcuts come back to bite you

- Developer Burnout: People start quitting because the pressure is unsustainable

The Hidden Costs

Here’s the kicker—while DDD might look like it saves time initially, the hidden costs are brutal:

- Rework Costs: It is more expensive to fix bugs in production than during development.

- Developer Turnover: Teams under constant deadline pressure have much higher attrition rates.

- Technical Debt: Those shortcuts compound like credit card interest.

- Customer Satisfaction: Poor quality leads to support nightmares and customer churn.

Resume-Driven Development: The Overengineering Trap

Now Resume-Driven Development happens when someone “new” joins a team and decides everything needs to be rebuilt with the latest, shiniest and modern technology stack.

This generally comes from a tech person, a new VP of Engineering who joined from another tech company, an Architect who was brought in from another internal team to help with a rather complex project or a Tech Lead who acquired the ownership of a feature due to a re-org.

Essentially, it would be someone who can assert authority of the need and has the buy-in from leadership to execute the proposed vision.

The Microservices Trap

Let me give you a specific example I’ve seen:

Original System: A monolithic application serving about 10,000 users. It’s working fine. The team understands it. Deployments are simple. Debugging is straightforward.

RDD Decision: “Let’s break this into microservices for scalability and maintanability!”

Process Followed

- Create new repositories for each microservice module

- Create additional repositories for common modules for communication, shared data structures, log management and publishing, etc.

- Implement Code Controls, Pull Request Templates, Deployment Pipelines for each repository.

- Database per microservice, manual constraints management

Side-Effects of the Microservices Trap

- Much more complex debugging

- Complexity with Async Communication

- Bugs with Eventual Consistency

- Distributed Monitoring Issues

- Message Sequencing/Ordering Problems

Test-Driven Development: The Proven Foundation

Now, let’s talk about something that actually works. Test-Driven Development (TDD) is a methodology that’s been around for decades and has proven its worth time and time again.

The TDD cycle is beautifully simple:

- Red: Write a failing test

- Green: Write minimal code to pass the test

- Refactor: Improve code while keeping tests green

Why TDD Actually Works

Here’s what I love about TDD:

Quality Assurance: Tests serve as executable specifications. You know exactly what your code is supposed to do because you wrote the test first.

Design Improvement: Writing tests first forces you to think about your API design. You end up with cleaner, more usable interfaces.

Regression Prevention: Once you have a comprehensive test suite, you can refactor with confidence. No more “did I break something?” anxiety.

Documentation: Tests serve as living documentation. New team members can understand what the code does by reading the tests.

Confidence: There’s something magical about having a green test suite. You know your code works.

Specification-Driven Development: The Next Evolution

Specification-Driven Development (SDD) is the next evolution in the AI and Agentic world. It is equally good for Humans as it may be for AI Agents. It is basically TDD but applied to the entire development process, not just individual functions.

The SDD Process

Here is how SDD would work:

- Specification Creation: Write detailed, unambiguous requirements before you start coding

- Acceptance Criteria: Define clear, testable success conditions

- Implementation: Write code that satisfies the specifications

- Validation: Continuously verify that your implementation matches the specs

There are various open source projects helping implement the methodology for Agentic Coding, but you can use them without Agentic Coding tools. I have perosonally tried Spec-Kit from Github and it does help with the end-to-end process, minimizing the initial friction.

Why SDD Makes Sense

I think SDD could solve a lot of the problems I’ve seen:

Alignment: Specifications ensure development actually aligns with business goals. No more “I thought you wanted X” conversations.

Communication: Clear specifications reduce misunderstandings between stakeholders, developers, and testers.

Quality: Detailed requirements lead to better implementations. You can’t build the wrong thing if you know exactly what the right thing looks like.

Maintainability: Well-specified systems are easier to maintain because everyone understands what they’re supposed to do.

Onboarding: New team members can understand systems faster when there are clear specifications to read.

SDD vs Traditional Approaches

Let me compare these approaches:

| Aspect | DDD | RDD | TDD | SDD |

|---|---|---|---|---|

| Quality Focus | Low | Variable | High | Highest |

| Maintainability | Poor | Poor | Good | Excellent |

| Team Satisfaction | Low | Variable | High | High |

| Long-term Cost | High | High | Medium | Low |

| Time to Market | Fast | Slow | Medium | Medium |

The thing I like about SDD is that it addresses the root cause of a lot of problems such as unclear requirements and misaligned expectations.

The Path Forward: Choosing the Right Approach

So, what should you do? Here are my thoughts based on team size and situation:

For Small Size Teams

- Focus on TDD: Get your testing culture right first. It’s easier to establish good habits when the team is small.

- Avoid RDD: Stick to proven technologies. You don’t have the bandwidth to deal with unnecessary complexity.

- Realistic Deadlines: Plan with buffer time. Small teams can’t absorb unexpected problems as easily.

For Medium Size Teams

- Implement SDD: Start creating detailed specifications. This is where communication becomes critical.

- Gradual TDD Adoption: Introduce testing incrementally. Don’t try to change everything at once.

- Architecture Reviews: Have architectural decisions review to prevent over-engineering.

For Large Size Teams

- Full SDD Implementation: You need comprehensive specification processes to keep everyone aligned. Maybe introduce Agentic/Automated processes for specifications, corrections and implementation.

- Microservices Consideration: Only when you actually need them. Don’t do it just because it’s cool or because lambdas are in the trend.

- Distributed TDD: Figure out how to do testing across service boundaries. Easier said that done, but not impossible.

Wrapping Up: Breaking the Cycle

Im my opinion Deadline-Driven Development and Resume-Driven Development aren’t just bad practices, they are the symptoms of deeper organizational issues.

DDD reflects poor planning and unrealistic expectations. RDD indicates a lack of focus on actual business value.

The solution isn’t just adopting TDD or SDD. It’s creating a culture that values:

- Realistic Planning: Deadlines based on actual capacity and complexity, not wishful thinking.

- Technology Pragmatism: Choosing tools based on fit, not trends.

- Quality Investment: Understanding that quality saves time and money in the long run.

- Team Well-being: Recognizing that burned-out developers produce poor code.

- Continuous Learning: Regular retros and process improvement.

By moving away from deadline and resume-driven approaches toward specification and test-driven methodologies, teams can deliver higher quality software while maintaining developer satisfaction and long-term project sustainability.

The choice isn’t between fast delivery and quality, it’s between sustainable development practices that deliver value consistently versus short-term gains that create long-term problems.